Hardware to Prove Humanity

Over the past couple of months, I’ve been working on a hardware+ML approach to prove that a human being is physically present at the moment of an online interaction.

This ideally tackles a growing problem: current anti-bot systems (CAPTCHAs, browser fingerprinting, behavioral models, etc.) rely on behavioral inference or credentials, but they don’t actually confirm that a living human is present and active. And as bots get increasingly skilled at mimicking human behavior and generating convincingly human-like output, those methods will only get less reliable.

This blog post serves as an overview of my solution.

A Physiological Approach to Presence

I’m trying to take a bio-based approach that measures human electrical and autonomic activity to confirm "a human is here" in real time. Specifically, I built a prototype headband with sensors for electrodermal activity (EDA, a skin conductivity measure), photoplethysmography (PPG, for heart rate), EEG (brain-wave) patterns via dry electrodes/IR sensors, and an IMU (accelerometer/gyroscope) for movement. On the ML side, so far I've created a model that analyzes these signals and classifies whether or not they come from a living human wearing the device (i.e., whether it’s a real person or a spoofed or absent user).1 The idea is similar to the biometric liveness detection used in security (e.g. face ID checks if you blink), but applied in a multimodal, continuous, real-time way to general online interactions rather than to one specific biometric.

I’m calling this Dydema, inspired by the Greek διάδημα (diádēma), the root of diadem, meaning “band” or “binding around.”2

NOTE: this isn’t a trust or identity system. It doesn’t try to decide who you are or whether you’re trustworthy, just that you’re alive and physically present right now. The driving idea is that even as internet trust systems improve, they’ll likely have trouble dealing with ever-more convincing AI agents. This project is meant to sit underneath all of that. It’s ideally a grounding layer that brings measurable human presence back into digital spaces, and it’s something we can hopefully build future trust systems on top of, not something to patch over or replace.

Why I Think This Might Work

These physiological signals come from several systems all running on their own rhythms but constantly affecting each other. The result is a messy, interdependent pattern that changes from moment to moment. That kind of natural variability is easy to measure, but extremely hard to fake. Even small correlations, like how heart rate and skin conductance shift together, show up differently in every person and can’t be easily replayed or simulated by a static or synthetic system.3
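
To make the "signals shift together" idea concrete, here is a minimal sketch of a sliding-window correlation between two channels. It is only an illustration, not the model used in the prototype: the series below are synthetic stand-ins (a shared slow drift plus noise), and the window and step sizes are arbitrary assumptions.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation; returns None if either series has no variance."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # a perfectly flat channel is itself suspicious
    return cov / math.sqrt(var_x * var_y)


def windowed_correlation(a, b, window, step):
    """Correlation of two equally sampled signals over sliding windows."""
    return [
        pearson(a[i:i + window], b[i:i + window])
        for i in range(0, len(a) - window + 1, step)
    ]


# Synthetic stand-ins, NOT real recordings: one slow shared drift drives
# both a heart-rate-like and a skin-conductance-like series.
rng = random.Random(0)
drift = [math.sin(2 * math.pi * t / 120) for t in range(600)]
heart_rate = [70 + 5 * d + rng.gauss(0, 1) for d in drift]
skin_cond = [2.0 + 0.5 * d + rng.gauss(0, 0.1) for d in drift]
flat_input = [2.0] * 600  # e.g. a disconnected or static source

coupled = windowed_correlation(heart_rate, skin_cond, window=120, step=60)
static = windowed_correlation(heart_rate, flat_input, window=120, step=60)
```

In this toy setup the coupled pair gives a strong positive correlation in every window, while the flat input yields no usable correlation at all. Real signals would be far messier, and a replayed recording would still pass a check this simple.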

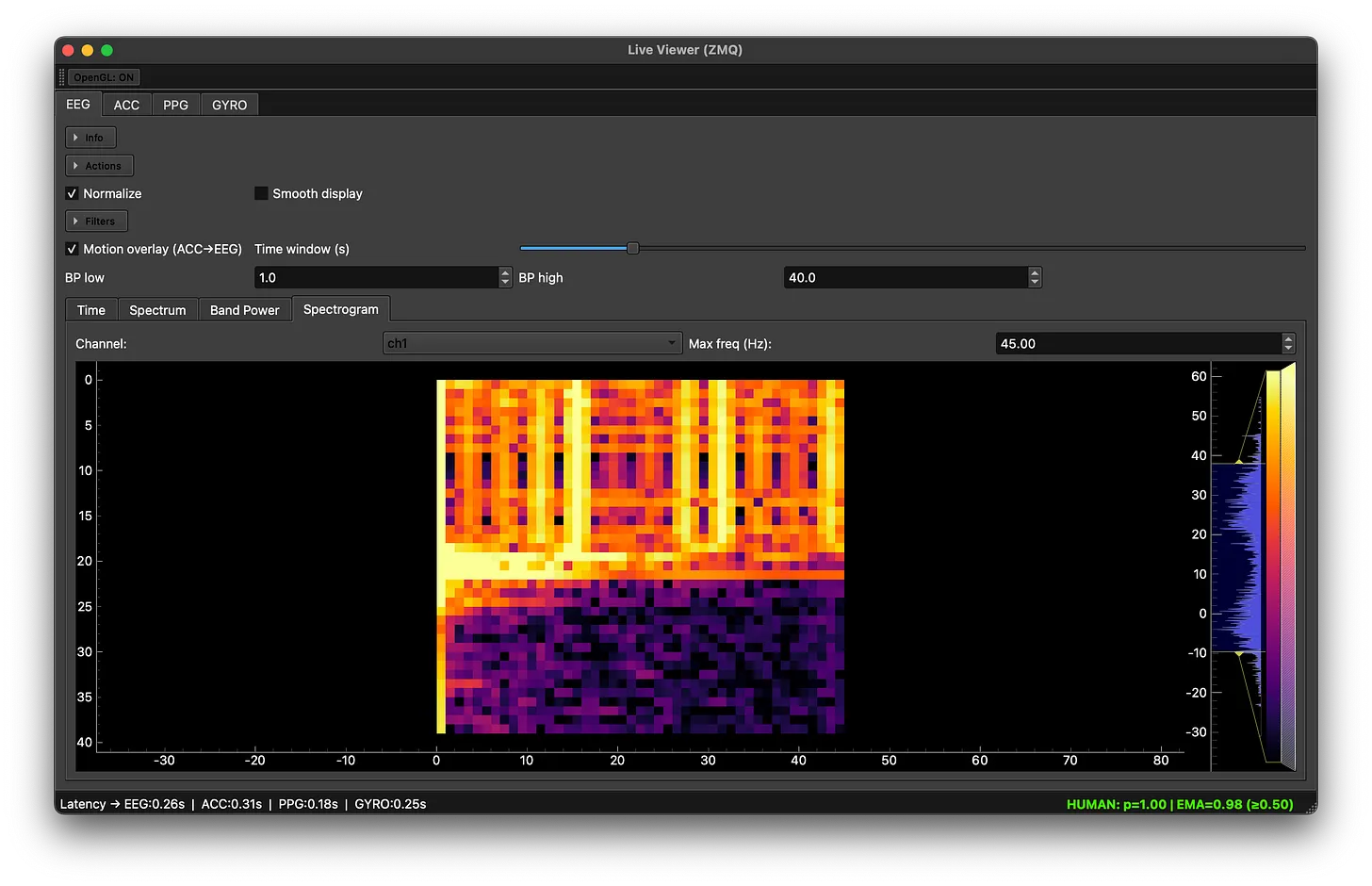

Here's a visualization program I made for the prototype. This is a spectrogram of EEG channel #1 voltage, with time on the Y axis and frequency on the X axis. Guess when I put it on.

Of course, spoofing is still a serious concern. An attacker could try to spoof the system using recorded signals or a biometric simulator. To counter this, the system can analyze signal dynamics for inconsistencies — like unnatural timing, noise profiles, or lack of physiological variability — and also incorporate challenge-response mechanisms (e.g. prompting a user to blink or take a breath) when anomalies are detected. Additionally, pairing each device with a secure hardware ID and cryptographic nonces can ideally ensure that responses are fresh and bound to a specific device session, making replay attacks a lot harder. For instance, if the signals look weird, the system could ask for a small head movement, a blink, or a deep breath, and subsequently verify both the natural physiological response and the matching cryptographic handshake.
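
A rough sketch of the nonce side of that challenge-response flow, using only Python's standard library. Everything named here is a hypothetical placeholder (`DEVICE_KEYS`, `issue_challenge`, the `"blink"` prompt, the 10-second window), and the `observed` flag stands in for whatever the signal model decides about the physiological response. A shared-secret HMAC is used purely for brevity; a real device would more likely hold an asymmetric key in secure hardware.

```python
import hashlib
import hmac
import secrets
import time

# Hypothetical per-device secret (assumption: provisioned at manufacture).
DEVICE_KEYS = {"device-001": secrets.token_bytes(32)}
_pending = {}  # nonce -> (device_id, prompt, issued_at)
CHALLENGE_TTL_S = 10.0  # arbitrary freshness window


def issue_challenge(device_id, prompt="blink"):
    """Server side: create a fresh, single-use nonce tied to one device."""
    nonce = secrets.token_hex(16)
    _pending[nonce] = (device_id, prompt, time.monotonic())
    return nonce


def sign_response(key, device_id, nonce, prompt, observed):
    """Device side: bind the observed event to this nonce and device."""
    msg = f"{device_id}|{nonce}|{prompt}|{int(observed)}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def verify_response(device_id, nonce, observed, tag):
    """Server side: accept each nonce at most once, and only while fresh."""
    entry = _pending.pop(nonce, None)  # pop, so a replayed nonce fails
    if entry is None:
        return False
    expected_device, prompt, issued_at = entry
    if expected_device != device_id or device_id not in DEVICE_KEYS:
        return False
    if time.monotonic() - issued_at > CHALLENGE_TTL_S:
        return False
    expected = sign_response(
        DEVICE_KEYS[device_id], device_id, nonce, prompt, observed
    )
    return bool(observed) and hmac.compare_digest(expected, tag)
```

Because the nonce is removed from `_pending` on first use, resubmitting a captured response fails even if its signature is valid. This only covers freshness and device binding; deciding whether the blink or breath was physiologically plausible is the hard part, and it is not shown here.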

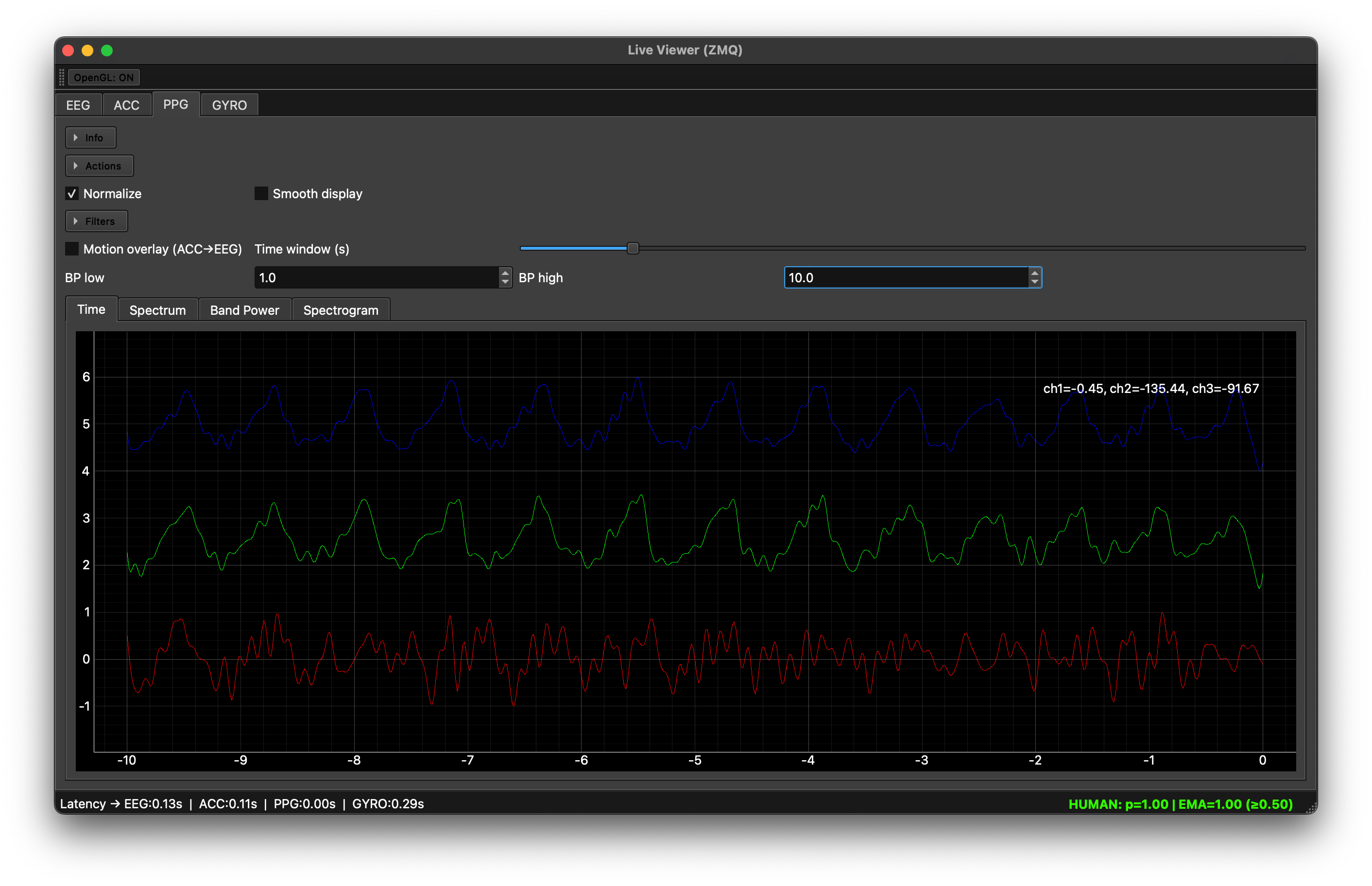

Same visualization program. This is a trace of all three PPG channels, measuring blood flow. Time is on the X axis, and normalized voltage is on the Y axis.

Designing for Privacy

Privacy and security are by far the toughest part here. The goal is only to verify that a real human is present right now – not to identify, track, or infer anything else (no personal info, no emotional or cognitive profiling – I have some reeeeaally serious issues with the biotech companies trying to do stuff like "measure your focus/attention level"). Ideally, most processing would eventually run on-device, outputting just a binary “human or not” flag. Not entirely sure how to get there yet.

In the current prototype, data simply streams to my server for analysis, but moving that processing onto the wearable or a personal device is high priority. Any data that might need to be sent (e.g. if a platform requires verification) obviously should be minimized and anonymized. The eventual goal here is a hybrid pipeline where inference happens on-device and only signed presence proofs are sent to external systems. If this ends up growing, open audits and third-party reviews of the hardware and code would be necessary. Essentially, if Dydema can’t be privacy-safe, it will have failed.
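
As a sketch of what a minimal signed presence proof could look like, the snippet below packs only a boolean and two timestamps into a token and signs it. The field names (`present`, `iat`, `exp`), the 30-second lifetime, and the token layout are assumptions made for illustration, not the prototype's format. HMAC with a shared key keeps the example within the standard library; a deployed system would more plausibly use asymmetric signatures so that verifiers never hold the signing key.

```python
import base64
import hashlib
import hmac
import json
import time


def make_presence_proof(key, present, ttl_s=30, now=None):
    """Device side: emit a short-lived token carrying no raw biosignal data."""
    now = time.time() if now is None else now
    payload = {"present": bool(present), "iat": int(now), "exp": int(now) + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode())
    sig = hmac.new(key, body, hashlib.sha256).hexdigest()
    return body.decode() + "." + sig


def check_presence_proof(key, token, now=None):
    """Verifier side: check the signature first, then the flag and expiry."""
    now = time.time() if now is None else now
    try:
        body, sig = token.split(".")
    except ValueError:
        return False
    expected = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, sig):
        return False
    payload = json.loads(base64.urlsafe_b64decode(body))
    return payload["present"] is True and now < payload["exp"]
```

The point of the design is what the payload leaves out: no samples, no features, no user or device identifier. The verifier learns only that some holder of the key asserted presence within the last few seconds.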

Current Status

This project is in its early days. I have a working prototype headband and a very small self-collected dataset, but no large-scale validation yet (so far it’s mostly just me testing it on myself to be honest). I also made a mock social platform where interaction is successfully gated by a valid "presence token."

The concept seems to work in principle – the model can easily distinguish when the device is worn by a person versus not – but I haven’t rigorously red-teamed it yet. There’s a ton still to figure out and prove: e.g. how well do these signals generalize across people? How easily can someone spoof it? How do we calibrate for each user’s physiology quickly? These are some open questions I’m working on.
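
For a feel of what per-window "worn vs. not worn" classification involves, here is a toy logistic regression on a single feature (the standard deviation of each window). The data is entirely synthetic and deliberately easy to separate, and the sample rate, window length, and signal shapes are made-up assumptions. It says nothing about how the real model performs and does not touch the adversarial case.

```python
import math
import random

rng = random.Random(0)
FS = 50       # assumed sample rate in Hz (hypothetical)
WINDOW = 100  # samples per window, i.e. 2 s at FS


def synthetic_window(worn):
    """Toy stand-in for one PPG-like window; NOT real sensor data."""
    if worn:
        # a ~1.2 Hz pulse-like oscillation plus a little noise
        return [math.sin(2 * math.pi * 1.2 * i / FS) + rng.gauss(0, 0.1)
                for i in range(WINDOW)]
    return [rng.gauss(0, 0.05) for _ in range(WINDOW)]  # noise floor only


def std_feature(window):
    """Population standard deviation of one window."""
    mean = sum(window) / len(window)
    return math.sqrt(sum((x - mean) ** 2 for x in window) / len(window))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=1.0, steps=2000):
    """Plain gradient descent on the logistic loss for a single feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


labels = [1] * 100 + [0] * 100
features = [std_feature(synthetic_window(bool(y))) for y in labels]
w, b = train_logistic(features, labels)
predictions = [int(sigmoid(w * x + b) >= 0.5) for x in features]
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
```

A single feature is enough here only because the toy "not worn" signal is pure noise. An attacker feeding in a plausible waveform would pass this check, which is exactly the kind of gap red-teaming is meant to find.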

The Long-Term Vision

If this approach does pan out, I imagine it becoming a kind of protocol or standard that developers could plug into their apps and platforms – basically “presence as an API”. For example, a social network or forum could require a live-human check (via a device like Dydema or any compatible hardware) before allowing posts or messages, instantly filtering out bot activity. Importantly, this wouldn’t convey your identity, just that you’re a real human. It could be one piece of a broader solution to keep online spaces human-only when needed, especially as we head into an era where bots and AI agents are everywhere. There are other ideas in this space, from social-graph approaches to things like Worldcoin’s iris scan – all with their own trade-offs (personally, the fact that Worldcoin is essentially buying people's biometrics in exchange for crypto and then building that into a global identity system really concerns me, and I don't believe it'll work). Dydema’s angle is real-time, de-identified biological signals as proof of life, which avoids some issues like requiring an ID or accumulating personal data, but admittedly has its own challenges in hardware and user acceptance.

Feedback is Very Welcome

(Here is the landing page for the project. It's more a marketing-esque high level overview, but right now it's where I'm collecting interest and feedback).

I’d appreciate some feedback on all of this, e.g. the technical feasibility, ideas for making it more robust against spoofing or replay attacks, and thoughts on how to keep such a system open, verifiable, and privacy-safe. Where are the holes in this idea? Are there existing projects or research I should know about (or that you’ve worked on)? I’m also curious about any suggestions on the human factors side: e.g. would people even wear a device like this for high-trust interactions online? Do you think people eventually would as bots get better, if they wouldn't do so now?

TLDR: The internet is quickly filling with bots that look and act human, and we're struggling to keep up. I'm attempting an experiment in going lower-level to address that, with bio-signals. Thank you in advance for any ideas or critiques!

Hell, logistic regression is already good enough to classify “on a person” vs “not on a person” with extremely high accuracy (>= 99% per window). The issue comes from adversarial robustness. A truly robust model will need to be much larger and will require much more training data, plus significant investment in red-teaming and the aforementioned adversarial robustness. Even with all of this, I expect the ML to be an order of magnitude easier than trying to derive some metric across a large population (e.g. “focus”).↩

I think this hits harder than Stephen, inspired by the Greek στέφανος (stéphanos), meaning “crown” or “wreath”.↩

Try getting an EEG motor imagery model to work just as well on 5 different people, both before and after they’ve had a cup of coffee. It can be done. It’s not very fun.↩